Tutorial: Machine Learning-Based Online Abuse Defense: Platform, Research, and Hands-on Labs

Abstract

Artificial Intelligence (AI) technologies have become increasingly pervasive in our daily lives. Recent breakthroughs such as large language models (LLMs) are being widely used to enhance work methods and boost productivity around the world. However, these technologies have also introduced new challenges in the realm of social cybersecurity. While AI has opened new frontiers in addressing social issues such as cyberharassment and cyberbullying, it has also exacerbated existing problems, such as the generation of hateful content, bias, and demographic prejudice. Although the interplay between AI and social cybersecurity has gained much attention from the research community, very few educational materials engage students by integrating AI and socially relevant cybersecurity through an interdisciplinary approach. In this tutorial, we will present:

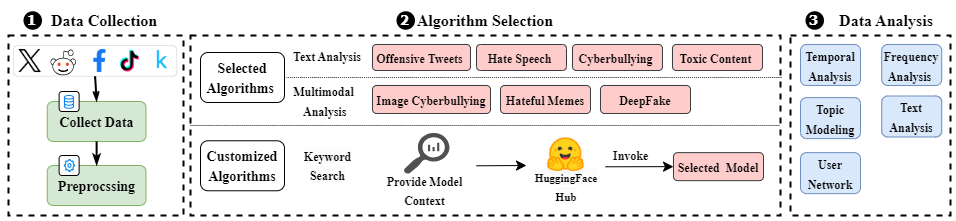

- A comprehensive overview of the Integrative Cyberinfrastructure for Online Abuse Research (ICOAR) platform, showcasing how it supports a range of online abuse research.

- Research case studies demonstrating the practical application of ICOAR in addressing complex challenges in social cybersecurity.

- Cyberbullying Labs, an innovative educational module designed to provide hands-on experience in applying AI technologies to combat cyberbullying.

The research case studies include:

- Offensive Tweets: A large-scale, data-driven study of COVID-related offensive tweets on Twitter. It uses machine learning to classify the nature and target of offensive content, and it uses statistical and network analysis to understand how such content spreads and evolves over time (Liao et al., 2023).

- Hate Speech: Detecting and mitigating online hate by leveraging the advanced reasoning capabilities of large language models (LLMs), combined with zero-shot, prompt-based detection. Because the detection prompts can be updated dynamically, this approach can keep pace with evolving forms of hate speech, and it shows significant improvements in detecting online hate compared with existing tools.

- Image Cyberbullying: Identifying and classifying cyberbullying in images using distinctive visual factors, such as body pose, facial emotion, objects, and social context, which enables a more nuanced understanding of cyberbullying incidents (Vishwamitra et al., 2021).

- Hateful Memes: Detecting COVID-19-related hateful memes using Vision and Language (V&L) models. The study explores how well these models generalize to new types of hateful memes and finds that they rely significantly more on visual information than on textual information (Guo et al., 2022).
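The Hate Speech case study relies on zero-shot, prompt-based detection, where prompts can be updated as new forms of hate emerge. The following Python code is a minimal sketch of that idea under stated assumptions. The class `HatePromptTemplate`, the functions `parse_label` and `classify`, the prompt wording and the HATEFUL/NOT_HATEFUL answer format are all hypothetical. The LLM is passed in as a plain callable rather than through any specific vendor API. The sketch is not meant to reproduce the case study's actual prompts or pipeline.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class HatePromptTemplate:
    """Hypothetical zero-shot prompt template whose hate-speech definitions
    can be extended at runtime, without retraining any model."""
    definitions: list[str] = field(default_factory=list)

    def add_definition(self, text: str) -> None:
        # Append a description of an emerging form of hate speech (no duplicates).
        if text not in self.definitions:
            self.definitions.append(text)

    def build(self, post: str) -> str:
        # Number each definition so the LLM can reason over them explicitly.
        rules = "\n".join(f"{i}. {d}" for i, d in enumerate(self.definitions, 1))
        return (
            "You are a content moderator. A post counts as hateful if it matches "
            "any of the following definitions:\n"
            f"{rules}\n\n"
            f"Post: {post!r}\n"
            "Answer with exactly one word: HATEFUL or NOT_HATEFUL."
        )


def parse_label(response: str) -> bool:
    # Map the model's free-text reply to a boolean. Only a first word of
    # "HATEFUL" (case-insensitive, trailing period allowed) counts as hateful;
    # anything else, including an empty reply, is treated as not hateful.
    words = response.strip().split()
    if not words:
        return False
    return words[0].strip(".").upper() == "HATEFUL"


def classify(post: str, template: HatePromptTemplate,
             llm: Callable[[str], str]) -> bool:
    # `llm` is any function that takes a prompt string and returns the model's reply.
    return parse_label(llm(template.build(post)))
```

To update detection, you call `add_definition` with a description of the new form of hate. No model retraining is involved, which is the main appeal of a prompt-based design.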

Prerequisites

- No extensive technical knowledge or experience is required to use the ICOAR platform.

- Non-CS students do not need advanced knowledge of AI or cybersecurity.

- CS students will benefit from basic knowledge of Python programming and a basic understanding of machine learning concepts and model evaluation, though this is not mandatory.
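CS students who want a quick refresher on model evaluation can start with the following minimal Python sketch. It computes the metrics commonly reported for a binary cyberbullying classifier, with label `1` meaning "cyberbullying." The function name `binary_metrics` and the toy labels are illustrative only. In practice, scikit-learn's `precision_recall_fscore_support` computes the same quantities.

```python
def binary_metrics(y_true: list[int], y_pred: list[int],
                   positive: int = 1) -> dict[str, float]:
    """Illustrative precision/recall/F1/accuracy for binary labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    # True positives: bullying posts correctly flagged.
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    # False positives: benign posts wrongly flagged.
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    # False negatives: bullying posts the model missed.
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    # Guard every ratio against division by zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = correct / len(pairs) if pairs else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


print(binary_metrics([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 0, 1, 1, 0]))
# → {'precision': 0.75, 'recall': 0.75, 'f1': 0.75, 'accuracy': 0.75}
```

Abuse datasets are often imbalanced, so precision, recall and F1 on the positive class usually say more about a detector than accuracy alone.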

Tutorial Schedule

The tutorial is designed to last 2 hours.